One of the key features of complex networks is that they capture interactions which have no limitations. In most electronic systems, be they Facebook, emails or web pages, we can make connections across the world with little if any cost.

However, what if there are constraints on the links made in a network? Surely we should change the way we study networks if space, time or some other constraint is having a significant effect on the formation or use of the network. This has been a major interest of researchers over the last few years. Space is one obvious limitation, as in some cases long-distance connections are less likely to be made. There has been a lot of work in this area over many decades.

It is only more recently that the role of time in networks has begun to receive more attention. A lot of this recent interest is in how to deal with networks where the connections are made at one time. That is because most communication networks, emails, phone calls and so forth, are of this type.

Yet networks are made of two parts: vertices and edges. The recent work is focussed on the case where it is the vertices, not the edges, which are created at a definite time. In such temporal vertex networks, causality forces the interactions between nodes to always point in one direction. For example, consider a citation network formed by academic papers. The nodes in our network are the academic papers and the links are formed by their bibliographies. So if paper A refers to another paper B then we can be (almost) sure that A was written after B. Information can therefore flow only from B to A. In fact any set of documents can only refer to older ones, so such networks are common. In law, judges refer to previous judgments to support their arguments. When registering a patent, prior art needs to be cited, that is, other previously granted work which may have some relevance to the current claim.

The same types of structure occur in several other situations. Any situation where there is a logical flow has the same causal structure. If we have a project where the nodes represent individual tasks then an edge from task S to task T could represent the fact that task S must be completed before task T is started. This has been the focus of work on temporal vertex networks in computer science. The logical flow of a mathematical argument or an Excel spreadsheet shows the same properties. These networks define what is called a partially ordered set, or poset, and it is under this title that one finds relevant work coming from mathematicians. A final example comes from the Causal Sets approach to quantum gravity. Here space-time is discrete, not continuous, and these discrete points are the nodes of the network. The nodes are connected by edges only if they are causally connected, and causality again gives these edges a common direction.

All of these temporal vertex networks have a key property: they contain no loops if you always follow the direction of the edges. You cannot go backwards in time. Thus the traditional name for such a network is a directed acyclic graph, or DAG for short.
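As a small illustration of the no-loops property, here is a minimal sketch (not taken from the papers discussed here) that tests whether a list of directed edges forms a DAG by trying to put the nodes in a time-compatible order. The function name `topological_order` and the toy paper labels are assumptions made for this example only.

```python
from collections import deque

def topological_order(edges):
    """Return the nodes in an order compatible with every (earlier, later) edge,
    or None if the edges contain a directed loop."""
    successors = {}
    in_degree = {}
    for earlier, later in edges:
        successors.setdefault(earlier, []).append(later)
        successors.setdefault(later, [])
        in_degree[later] = in_degree.get(later, 0) + 1
        in_degree.setdefault(earlier, 0)

    # Start from nodes that nothing points to (the "oldest" nodes).
    ready = deque(sorted(n for n, d in in_degree.items() if d == 0))
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)
        for nxt in successors[node]:
            in_degree[nxt] -= 1
            if in_degree[nxt] == 0:
                ready.append(nxt)

    # If a loop exists, the nodes on it never reach in-degree zero.
    return order if len(order) == len(in_degree) else None

# Hypothetical papers: information flows from the older paper to the newer one.
citations = [("B", "A"), ("C", "A"), ("C", "B")]
print(topological_order(citations))                 # ['C', 'B', 'A']
print(topological_order(citations + [("A", "C")]))  # None, the extra edge closes a loop
```

If an ordering exists, the network is a DAG; if the function returns None, at least one edge must point "backwards in time".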

So the question is: how can we adapt traditional network measures to deal with the fact that these networks, DAGs, are constrained by causality? Are there new measures we should employ which give more insight into such networks?

One feature of a DAG we have been exploiting is that if we always follow the direction of the arrows, the direction of time, then not all nodes are connected. If we like we could add an edge whenever there is such a path connecting a later node to an earlier one, a process known as transitive completion. On the other hand we could remove as many edges as we can while leaving the causal relationships intact, a process known as transitive reduction. That is, if there is a path between two nodes in the network before transitive reduction, then there will still be a path between them afterwards.
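The two operations can be sketched in a few lines of Python. This is a simple illustration that assumes the input is a DAG given as (later, earlier) pairs. It is not the code used in our study, and the function names are chosen for this example only.

```python
def _successors(edges):
    """Map each node to the set of nodes it points to directly."""
    succ = {}
    for u, v in edges:
        succ.setdefault(u, set()).add(v)
        succ.setdefault(v, set())
    return succ

def _reachable(succ, start):
    """All nodes that can be reached from `start` by following the arrows."""
    seen, stack = set(), list(succ[start])
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(succ[node])
    return seen

def transitive_completion(edges):
    """Add an edge (u, v) whenever some directed path leads from u to v."""
    succ = _successors(edges)
    return {(u, v) for u in succ for v in _reachable(succ, u)}

def transitive_reduction(edges):
    """Keep edge (u, v) only if no longer path from u to v exists (DAG assumed)."""
    succ = _successors(edges)
    return {
        (u, v)
        for u in succ
        for v in succ[u]
        # (u, v) is redundant if v is reachable via another direct successor of u.
        if not any(v in _reachable(succ, w) for w in succ[u] if w != v)
    }

# Hypothetical papers: A cites B and C, and B cites C.
edges = {("A", "B"), ("B", "C"), ("A", "C")}
print(sorted(transitive_reduction(edges)))                     # [('A', 'B'), ('B', 'C')]
print(sorted(transitive_completion({("A", "B"), ("B", "C")})))  # adds ('A', 'C')
```

Both operations leave the set of causally connected pairs unchanged. Only the number of edges used to express those connections differs.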

What we have done (in Transitive Reduction of Citation Networks) is look at how real data from citation networks behaves after transitive reduction. We find that different types of citation network behave very differently. The citation network formed from academic papers taken from the arXiv repository and the network of US Supreme Court judgments both show that about 80% of the edges are not needed to retain all the causal relationships. On the other hand the patents network shows the opposite behaviour, with all but 15% of edges being essential. The edges removed tend to be the citations to older papers. One interpretation is that academics and judges may be citing well known early papers and judgments even though their current work is only directly related to more recent documents. Perhaps some of these citations do not indicate that the early work was needed but reflect other motivations, such as simple copying of citations to popular papers or to reviews in the field which at best have only general relevance.

The number of citations to a document after transitive reduction certainly gives us a different view of the importance of different documents. For instance paper hep-th/9802109 on the arXiv (Gauge Theory Correlators from Non-Critical String Theory by Gubser et al.) was cited by 1641 papers in the network, but only three citations remained after transitive reduction! On the other hand, paper hep-th/9905111 (Large N Field Theories, String Theory and Gravity by Aharony et al.) also has a large number of citations in the raw data, 806, yet after transitive reduction it has 77, so it retains far more of its original citations. Perhaps information in the second paper was used more diversely.

We can find similar examples in the US Supreme Court citation network. The case Schneider vs. New Jersey (1939) has 144 citations in the original data but this drops to just one after transitive reduction. Stromberg vs. California (1931) also falls from 132 citations to just one. Conversely, the case Heller vs. New York (1973) only shows a slight fall after transitive reduction, falling from 68 to 48 citations, and has the most citations in our reduced network. The second most cited case after transitive reduction is Hamling vs. United States, which drops from 68 to 38 citations. Wikipedia lists hundreds of Supreme Court cases but the last two are not famous enough to make the Wikipedia list. The analysis suggests they may have more importance than a simple citation count would suggest. At the very least it might be worth checking out documents that are highly cited in the essential network.
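To see how a citation count can collapse or survive, here is a toy sketch with invented documents ("classic", "hub", P2 and so on are hypothetical, not taken from our data). The "classic" is cited by a chain of papers that also cite each other, while the "hub" is cited by papers that are otherwise unrelated.

```python
from collections import Counter

def reduce_citations(citations):
    """Transitive reduction of (citing, cited) pairs; the input is assumed acyclic."""
    cites = {}
    for citing, cited in citations:
        cites.setdefault(citing, set()).add(cited)

    def reachable(start):
        # Every document that `start` cites directly or indirectly.
        seen, stack = set(), list(cites.get(start, ()))
        while stack:
            doc = stack.pop()
            if doc not in seen:
                seen.add(doc)
                stack.extend(cites.get(doc, ()))
        return seen

    return {
        (citing, cited)
        for citing, targets in cites.items()
        for cited in targets
        # Drop a citation if the cited document is also reached via another reference.
        if not any(cited in reachable(mid) for mid in targets if mid != cited)
    }

def citation_counts(citations):
    """Number of times each document is cited."""
    return Counter(cited for _, cited in citations)

raw = {
    ("P2", "classic"), ("P3", "classic"), ("P4", "classic"),  # everyone cites the classic
    ("P3", "P2"), ("P4", "P3"),                               # ...and also each other
    ("R1", "hub"), ("R2", "hub"), ("R3", "hub"),              # independent uses of the hub
}
reduced = reduce_citations(raw)
before, after = citation_counts(raw), citation_counts(reduced)
print(before["classic"], after["classic"])  # 3 1
print(before["hub"], after["hub"])          # 3 3
print(len(reduced) / len(raw))              # 0.75, the fraction of essential citations
```

Both documents start with three citations, but only the one used by independent papers keeps them after reduction.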

Another way to look at citation networks is to see if we can define a dimension for the network. That is, we can try to quantify how much variation there is in the citation process. A low dimension means that there are few directions, few distinct themes relevant for citation in a document. A high dimension indicates that there is a wide range of relevant but distinct directions from which a document will draw inspiration. We were often able to assign an interesting value for the dimension of our citation data.

For academic papers, we found that different fields of research have different dimensions. For papers in the hep-th arXiv section (largely string theory) we generally find a low dimension of around 2, while for theoretical papers closely linked to particle physics experiments we find more variation, as indicated by a higher dimension of 3. The quant-ph section was also around 3, while the astro-ph section had a slightly higher dimension of around 3.5. So despite similarities in the main data using standard measures, our time-aware dimension measures show clear differences in the citation behaviour of different areas. String theory in particular seems to be a tightly knit collection of work, with each work largely dependent on all the other work, so that few independent directions can be pursued.

The US Supreme Court judgments were more complicated. Small samples (usually from modern judgments) showed a dimension of around 2.5 to 3 but larger samples, typically ranging from modern to the earliest judgments, had lower dimensions, closer to 2. We interpreted this as reflecting the way that there were few early judgments compared to the number produced today, so that the further back we traced in time to find the influence of judgments on recent ones, the smaller the variation. Perhaps that is not so surprising, and we might expect a similar shape if we could follow scientific papers back to the 18th century! Patents, on the other hand, showed a much higher dimension, though again the results were involved.
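The discussion above does not say how a dimension is extracted from a network. One standard estimator borrowed from causal set theory is the Myrheim-Meyer dimension, and the sketch below assumes that choice purely for illustration. It compares the fraction of node pairs joined by a directed path with the value expected for points scattered uniformly in an interval of d-dimensional Minkowski space-time, f(d) = Γ(d+1)Γ(d/2) / (2Γ(3d/2)). The function names and the bisection bounds are assumptions of this example.

```python
import math

def expected_ordering_fraction(d):
    """Expected fraction of causally related pairs for points scattered uniformly
    in an interval of d-dimensional Minkowski space-time."""
    return math.gamma(d + 1) * math.gamma(d / 2) / (2 * math.gamma(3 * d / 2))

def myrheim_meyer_dimension(n_nodes, n_related_pairs, lo=1.0, hi=20.0):
    """Solve expected_ordering_fraction(d) = observed fraction by bisection.

    `n_related_pairs` counts the pairs joined by a directed path, that is the
    number of edges in the transitive completion of the sample.
    """
    fraction = n_related_pairs / (n_nodes * (n_nodes - 1) / 2)
    for _ in range(100):
        mid = (lo + hi) / 2
        # The expected fraction falls as d grows, so too large a value means d is too small.
        if expected_ordering_fraction(mid) > fraction:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

# A chain of 5 documents, each citing the previous one: all 10 pairs are related.
print(round(myrheim_meyer_dimension(5, 10), 3))  # 1.0
# If only half of the pairs are related the estimate is two dimensions.
print(round(myrheim_meyer_dimension(5, 5), 3))   # 2.0
```

The more pairs of documents that are unrelated by any chain of citations, the higher the estimated dimension, which matches the idea of many independent directions.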

It is clear from just the few studies we have made that time makes a crucial difference to the structure of a network. There is surely much more to find when networks are embedded in time.

Example of the transitive reduction (left) and the transitive completion (right) of the directed acyclic graph in the centre.

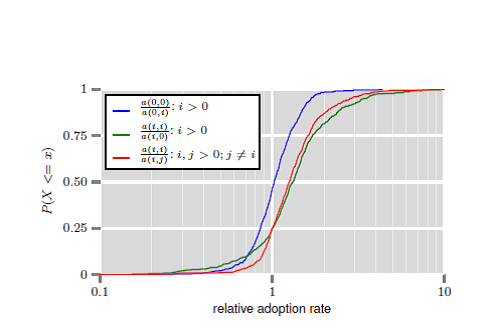

References:

Clough, J.R. & Evans, T.S. What is the dimension of citation space?, arXiv:1408.1274

Clough, J.R., Gollings, J., Loach, T.V. & Evans, T.S. Transitive Reduction of Citation Networks, J.Complex

Dowker, F. 2006. Causal sets as discrete spacetime, Contemporary Physics, 47, 1-9

Holme, P. & Saramäki, J. 2012. Temporal Networks, Physics Reports, 519, 97-125

Simkin, M.V. & Roychowdhury, V.P. 2003. Read before you cite!, Complex Systems, 14, 269-274