A Markov chain, named after Andrey Markov, is a mathematical system that undergoes transitions from one state to another on a state space. It is a random process usually characterized as memoryless: the next state depends only on the current state and not on the sequence of events that preceded it. This specific kind of "memorylessness" is called the Markov property.

The importance of directionality in detecting interest communities

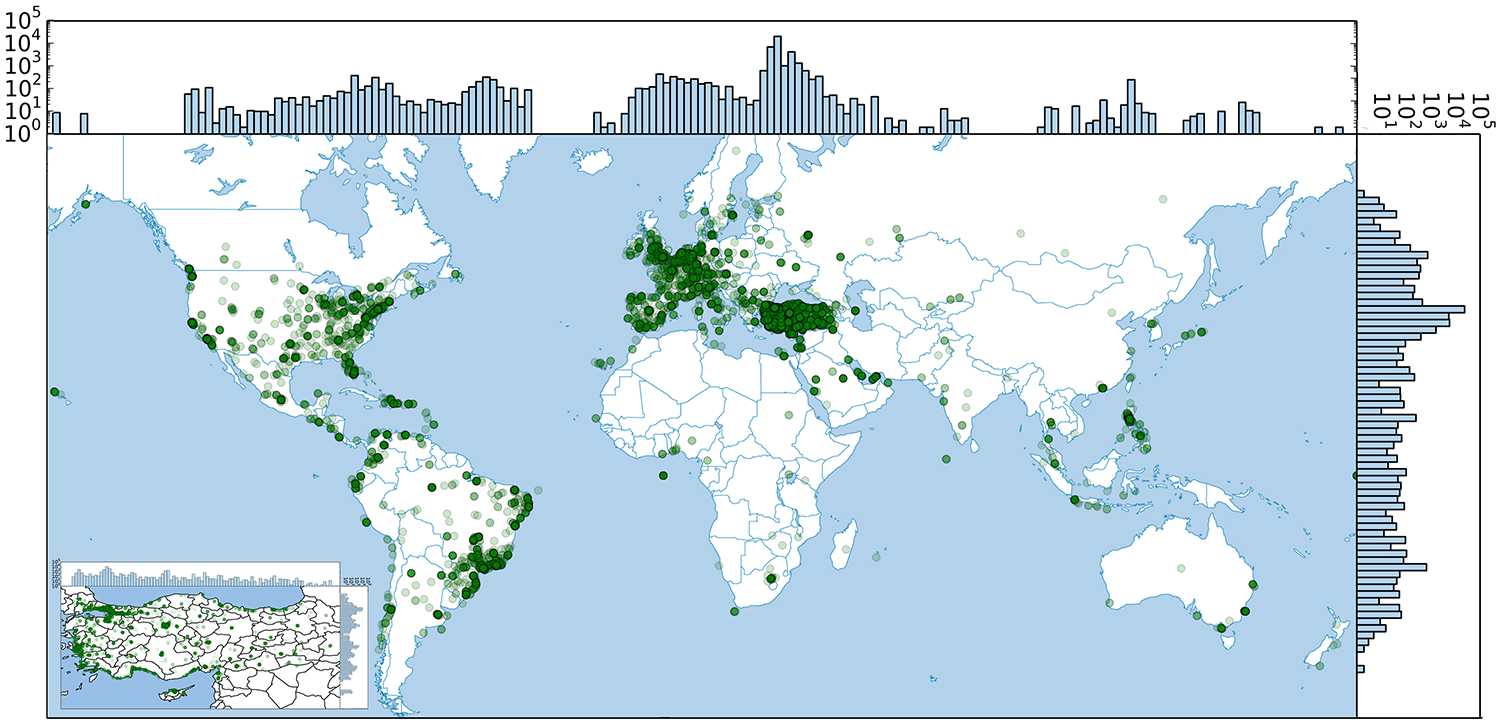

The riots of 6–10 August 2011 in England were followed by an intense public debate about the role and influence of social media during the unrest. Politicians, journalists, pundits and bloggers alike weighed in on the issue, but few arguments were based on data. A few months after the riots, The Guardian made available to the public a list of the 1000 ‘most influential’ (i.e. the most re-tweeted) Twitter users during the riots. The list compiled by The Guardian comprised a diverse set of Twitter users, including newspapers, broadcasting services, news agencies, as well as individual accounts of journalists, politicians, entertainers, global and local activists, and members of the public. To confirm the hypothesis an independent study was done (which is explained below) to analyse the connected components among 1000 listed people, based on twitter directed network.

Flow-based ‘interest communities’: a view of the

network at different resolutions

To gain a structured view of the communities in the network at different levels of resolution, Markov Stability community detection is used. A key advantage of Markov Stability is that it is based on a quantitative criterion that relies on flow propagation and containment, and thus identifies flow communities. The communities so found correspond to ‘interest communities’, in so much as information, interest and influence are propagated, retained and reinforced within them following the edges. If edge directionality is ignored, the community structure is blurred and the analysis severely hindered. A second advantage of the method is that the network is scanned for structure at all scales, and flow communities are found to be relevant at different levels of resolution.

Interest communities at all scales as detected by Markov Stability.

(a) The number of communities at each Markov time (t). The inset shows the network with nodes and edges colored according to their community at four illustrative Markov times. Two of these partitions at different resolutions are shown in more detail.

(b) At relatively short Markov times (t1 = 0.15), we find 149 communities (coarse-grained network view in the centre). Three examples of communities in this partition are ‘police and crime reporting’ (top), ‘Hackney’ (bottom), ‘the Daily Telegraph’ (left) shown with their members and their self-description word clouds.

(c) At longer Markov times (t4 = 7.0) we find four communities (coarse-grained view in the centre): three large communities broadly corresponding to ‘UK’ (bottom-right), ‘international’ (top), ‘celebrities/entertainment’ (bottom-left) and a small one corresponding to the ‘BBC’ (right), which remains as a distinct community across a large span of Markov times.

As a visual aid to interpret the theme of the communities, ‘word clouds’ are created from the most frequently used words in the Twitter self biographies of the users in each community

The importance of directionality in detecting interest communities

Communities containing the account of BBC Radio 4’s Today programme (bbcr4today) in the undirected (top, diamonds) and directed (bottom, circles) versions of the network at Markov times

t = 0.86, t = 1.3 and t = 7.0, along with their word clouds. In the middle it is shown the size of the communities of the Today programme in both versions of the network for Markov times between 0.1 and 10.

As stated above, the BBC is an example of a flow community that stands out in its persistency. In above figure it is shown how the community of BBC’s Today programme (a morning news broadcast with a broad audience) remains consistently grouped across many levels of resolution in the analysis of the directed network: from an early Markov time, BBC-related accounts are grouped together and remain so all the way up to the top levels of resolution, with consistent word clouds throughout. This phenomenon depends strongly on the directionality of the flows: the nodes in the BBC community are among the most important in the network (high in-degree and PageRank), attracting flow (attention) from elsewhere in the network and retaining it for long periods of Markov time.

Whereas in a symmetric network, such communities can go undetected, as shown in above fig., where the corresponding undirected community of the BBC’s Today programme is quickly blurred across Markov times and gets mixed with a variety of users with little in common, consisting mainly of politicians from the Labour Party and journalists.

Interestingly, this drastic difference between directed and undirected communities is not observed for all communities in the network. There are communities, such as the one including Guardian columnist George Monbiot, which behave in an essentially similar manner in both cases across levels of resolution (Figure is shown below).

This difference between communities that are sensitive or insensitive to directionality persists across time scales, signalling the fact that some groupings (such as the BBC community) are fundamentally based on retention of directed flows, while others (such as the Monbiot community) follow from a balanced flow and, thus, can be captured by standard undirected measures. We note that the directed Markov Stability method is able to detect both types of communities simultaneously.

Directed and undirected communities containing the account of George Monbiot (georgemonbiot) obtained from the undirected (top, diamonds) and directed (bottom, circles) networks at Markov times t = 0.8603, t = 1.3049 and t = 7.0, along with their word clouds. We can compare these results with those obtained in figure 2 for BBC Radio 4’s Today programme

Reference:

http://rsif.royalsocietypublishing.org/content/royinterface/11/101/20140940.full.pdf

http://en.wikipedia.org/wiki/Markov_chain

The changes of state of the system are called transitions. The probabilities associated with various state changes are called transition probabilities. The process is characterized by a state space, a transition matrix describing the probabilities of particular transitions, and an initial state (or initial distribution) across the state space. By convention, It is assumed that all possible states and transitions have been included in the definition of the process, so there is always a next state, and the process does not terminate.

Markov time is the time spent in each state before transition to other state.

The importance of directionality in detecting interest communities

Directionality is a crucial ingredient in many complex networks in which information, energy or influence are transmitted. In such directed networks, analysing flows (and not only the strength of connections) is crucial to reveal important features of the network that might go undetected if the orientation of connections is ignored. Clearly, it is not the same to ‘follow’ a widely known personality in Twitter as to be followed by one.

The riots of 6–10 August 2011 in England were followed by an intense public debate about the role and influence of social media during the unrest. Politicians, journalists, pundits and bloggers alike weighed in on the issue, but few arguments were based on data. A few months after the riots, The Guardian made available to the public a list of the 1000 ‘most influential’ (i.e. the most re-tweeted) Twitter users during the riots. The list compiled by The Guardian comprised a diverse set of Twitter users, including newspapers, broadcasting services, news agencies, as well as individual accounts of journalists, politicians, entertainers, global and local activists, and members of the public. To confirm the hypothesis an independent study was done (which is explained below) to analyse the connected components among 1000 listed people, based on twitter directed network.

Flow-based ‘interest communities’: a view of the

network at different resolutions

To gain a structured view of the communities in the network at different levels of resolution, Markov Stability community detection is used. A key advantage of Markov Stability is that it is based on a quantitative criterion that relies on flow propagation and containment, and thus identifies flow communities. The communities so found correspond to ‘interest communities’, in so much as information, interest and influence are propagated, retained and reinforced within them following the edges. If edge directionality is ignored, the community structure is blurred and the analysis severely hindered. A second advantage of the method is that the network is scanned for structure at all scales, and flow communities are found to be relevant at different levels of resolution.

Interest communities at all scales as detected by Markov Stability.

(a) The number of communities at each Markov time (t). The inset shows the network with nodes and edges colored according to their community at four illustrative Markov times. Two of these partitions at different resolutions are shown in more detail.

(b) At relatively short Markov times (t1 = 0.15), we find 149 communities (coarse-grained network view in the centre). Three examples of communities in this partition are ‘police and crime reporting’ (top), ‘Hackney’ (bottom), ‘the Daily Telegraph’ (left) shown with their members and their self-description word clouds.

(c) At longer Markov times (t4 = 7.0) we find four communities (coarse-grained view in the centre): three large communities broadly corresponding to ‘UK’ (bottom-right), ‘international’ (top), ‘celebrities/entertainment’ (bottom-left) and a small one corresponding to the ‘BBC’ (right), which remains as a distinct community across a large span of Markov times.

As a visual aid to interpret the theme of the communities, ‘word clouds’ are created from the most frequently used words in the Twitter self biographies of the users in each community

The importance of directionality in detecting interest communities

Communities containing the account of BBC Radio 4’s Today programme (bbcr4today) in the undirected (top, diamonds) and directed (bottom, circles) versions of the network at Markov times

t = 0.86, t = 1.3 and t = 7.0, along with their word clouds. In the middle it is shown the size of the communities of the Today programme in both versions of the network for Markov times between 0.1 and 10.

As stated above, the BBC is an example of a flow community that stands out in its persistency. In above figure it is shown how the community of BBC’s Today programme (a morning news broadcast with a broad audience) remains consistently grouped across many levels of resolution in the analysis of the directed network: from an early Markov time, BBC-related accounts are grouped together and remain so all the way up to the top levels of resolution, with consistent word clouds throughout. This phenomenon depends strongly on the directionality of the flows: the nodes in the BBC community are among the most important in the network (high in-degree and PageRank), attracting flow (attention) from elsewhere in the network and retaining it for long periods of Markov time.

Whereas in a symmetric network, such communities can go undetected, as shown in above fig., where the corresponding undirected community of the BBC’s Today programme is quickly blurred across Markov times and gets mixed with a variety of users with little in common, consisting mainly of politicians from the Labour Party and journalists.

Interestingly, this drastic difference between directed and undirected communities is not observed for all communities in the network. There are communities, such as the one including Guardian columnist George Monbiot, which behave in an essentially similar manner in both cases across levels of resolution (Figure is shown below).

This difference between communities that are sensitive or insensitive to directionality persists across time scales, signalling the fact that some groupings (such as the BBC community) are fundamentally based on retention of directed flows, while others (such as the Monbiot community) follow from a balanced flow and, thus, can be captured by standard undirected measures. We note that the directed Markov Stability method is able to detect both types of communities simultaneously.

Directed and undirected communities containing the account of George Monbiot (georgemonbiot) obtained from the undirected (top, diamonds) and directed (bottom, circles) networks at Markov times t = 0.8603, t = 1.3049 and t = 7.0, along with their word clouds. We can compare these results with those obtained in figure 2 for BBC Radio 4’s Today programme

Reference:

http://rsif.royalsocietypublishing.org/content/royinterface/11/101/20140940.full.pdf

http://en.wikipedia.org/wiki/Markov_chain